Political Science

advertisement

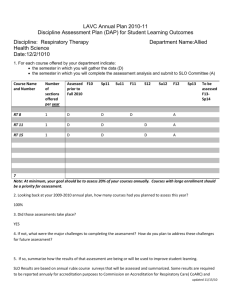

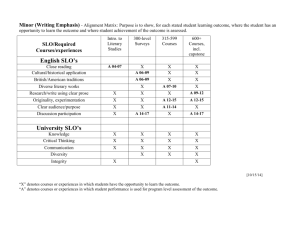

Annual Assessment Report to the College 2011-12

College: CSBS
Department: Political Science
Program: Undergraduate

Note: Please submit report to your department chair or program coordinator, the assessment office and to the Associate Dean of your College by September 28, 2012. You may submit a separate report for each program which conducted assessment activities.

Liaison: Jennifer De Maio

1. Overview of Annual Assessment Project(s) (optional)

1a. Assessment Process Overview: Provide a brief overview of the assessment plan and process this year.

Our Department uses a direct assessment model which we call Progressive Direct Assessment (PDA). The approach is designed to involve many faculty in the Department, be an integrated component of the existing educational process, and provide information about student learning outcomes from students' introduction to Political Science research methods through their final courses as majors in the Department.

For the 2011-2012 academic year, our Department continued assessing our gateway and capstone courses. With these courses, we are able to assess our departmental SLOs and track our students as they progress through the Political Science major. We received copies of final exams or papers from seven courses in the Fall 2011 and Spring 2012 semesters. Instructors were asked to share the exam questions or essay prompts given to the students in order to provide context for the scoring. We chose a random sample of fifteen works per course. This year, the assessment committee consisted of four full-time faculty members, including the assessment coordinator. Two committee members were asked to read the final papers for each class and score them according to the SLO rubrics. The committee members then reconciled their scores on the exams/works and reported the results to the assessment coordinator.
The results were synthesized into charts that show the percentages of works rated 'unsatisfactory,' 'elementary,' 'developing,' 'proficient,' and 'exemplary.' These charts were then distributed to the Department, discussed at our faculty meetings, and made available on the Political Science website. In 2011-2012, we also continued our participation in the Simplifying Assessment Across the University Pilot Program. Like our model, the Simplifying Assessment program asks departments to assess signature assignments from gateway and capstone courses.

September 2012, De Maio

2. Student Learning Outcome Assessment Project: Answer questions according to the individual SLO assessed this year. If you assessed an additional SLO, report in the next chart below.

2a. Which Student Learning Outcome was measured this year?

For 2011-2012, we measured SLO I and SLO IV.

I. Professional Interaction and Effective Communication: Students should demonstrate persuasive and rhetorical communication skills for strong oral and written communication in small and large groups.

IV. Critical Thinking: Students should demonstrate increasingly sophisticated skills in reading primary sources critically. Students should be able to research and evaluate the models, methods and analyses of others in the field of Political Science, and critically integrate and evaluate others' work.

2b. Does this learning outcome align with one of the following University Fundamental Learning Competencies? (check any which apply)

Critical Thinking: X
Oral Communication: X
Written Communication: X
Quantitative Literacy: X
Information Literacy: X
Other (which?):

2c. What direct and indirect instrument(s) were used to measure this SLO?
We used our PDA model and collected fifteen samples of final exams or final papers from the following courses: Political Science 372, Political Science 471D, Political Science 471E, and Political Science 471F. In total, we collected data from seven courses. Two members of the assessment committee then read the samples and scored them using our assessment rubric.

2d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (comparing freshmen with seniors)? If so, describe the assessment points used.

With our assessment model, our goal is to conduct a cross-sectional comparison that gives us a better understanding of how our students are learning as they progress through the major. We collect data when students first learn the "mechanics" of political science in the gateway research methods course and compare it with data from our proseminars (prosems), which tend to be their final course as majors. We chose fifteen papers at random from each course assessed. The average size of the classes assessed was 45 students, and the majority of the students assessed are political science majors. In the gateway class (PS 372), most students are in their second or third year, while the students in the capstone courses (PS 471) tend to be in their final year in the program.

2e. Assessment Results & Analysis of this SLO: Provide a summary of how the evidence was analyzed and highlight important findings from collected evidence.

Members of the assessment committee were asked to read 15 papers per course assessed and evaluate them using the scoring rubrics for SLO 1 and SLO 4. The members then submitted the completed rubrics to the liaison, who aggregated the results and put the data into charts (see Appendix A). Based on the evidence collected, our students appear to be improving with regard to both written and oral communication and critical thinking.
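The sampling and aggregation steps described above (fifteen works drawn at random per course, then the percentage of works at each rubric level) can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the helper names `draw_sample` and `level_percentages` are hypothetical, the scores are invented placeholders rather than the Department's data, and it assumes each work already has a single reconciled score on the 0-4 rubric scale (the report does not say how the two readers' scores were reconciled).

```python
import random
from collections import Counter

# Rubric levels on the 0-4 scale: index = score.
LEVELS = ["unsatisfactory", "elementary", "developing", "proficient", "exemplary"]


def draw_sample(class_size, k=15, seed=None):
    """Hypothetical helper: pick k distinct work indices at random from one class."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(class_size), k))


def level_percentages(scores):
    """Hypothetical helper: percentage of works at each rubric level (0-4)."""
    if not scores or any(s not in range(len(LEVELS)) for s in scores):
        raise ValueError("scores must be a non-empty list of integers from 0 to 4")
    counts = Counter(scores)
    n = len(scores)
    return {LEVELS[s]: round(100 * counts.get(s, 0) / n, 1) for s in range(len(LEVELS))}


# Invented reconciled scores for one course (15 works); NOT the Department's data.
example_scores = [1, 2, 2, 3, 3, 3, 2, 4, 1, 3, 2, 3, 4, 2, 0]

print(draw_sample(45, seed=1))  # 15 of 45 works, matching the average class size
print(level_percentages(example_scores))
# {'unsatisfactory': 6.7, 'elementary': 13.3, 'developing': 33.3, 'proficient': 33.3, 'exemplary': 13.3}
```

Because the percentages are rounded to one decimal place, a course's levels may sum to slightly less or more than 100 (here 99.9).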
In the past, we had found that more students were scoring in the satisfactory-exemplary range in the gateway course than in the capstone courses. We revised our scoring rubrics and asked faculty to provide detailed instructions for assignments in the capstone courses. Both of these changes may explain why students are now scoring higher in the capstones. We would still like to see more students in the proficient-exemplary range across the board, and we know that we need to continue devoting resources to developing writing and critical thinking skills.

2f. Use of Assessment Results of this SLO: Were assessment results from previous years or from this year used to make program changes in this reporting year?

Type of change:

* course sequence: We recognize that course sequencing is a problem because students often take the gateway course in the semester immediately preceding the capstone course, or in some cases in the same semester. The gateway class is a prerequisite for the capstone class, and we are discussing making it a prerequisite for any 400-level course. The problem with this strategy is that it might create a bottleneck in the gateway course and may not be feasible given current enrollment challenges.
* addition/deletion of courses in program: We are discussing the possibility of offering an Introduction to Political Science course which would serve as a gateway and introduce students to the five subfields of Political Science. We are also using data from institutional research to begin reviewing our curriculum as a whole, and we are likely to make further changes to it.
* student support services: We need to continue working with students to improve their writing skills. To that end, we have begun to use Instructional Student Assistants (ISAs) in some of our upper-division GE classes that are designated as writing-intensive. We have also had multiple discussions in Department meetings about how to make better use of campus resources, including WRAD.
* revisions to program SLOs: We disaggregated and revised our writing and critical thinking SLOs based on recent literature on assessment and discussions with other departments at the monthly assessment meetings.
* assessment instruments: We changed the scale on our scoring rubric from 0-2 to 0-4 to allow the committee to capture greater variation in student performance. The scale is now: 0 - unsatisfactory; 1 - elementary; 2 - developing; 3 - proficient; and 4 - exemplary.
* describe other assessment plan changes: We increased our sample size from 10 papers per course to 15 and decided to focus on assessing two SLOs for this cycle.

Have any previous changes led to documented improvements in student learning? (describe)

We have made an effort to close the feedback loop between evidence from assessment data and improvements in student learning. One of our findings relates to changing the prompts students receive for essays and papers. We have discussed this finding in Department meetings, and individual faculty have begun making changes that we believe have strengthened the writing and critical thinking outcomes.

3. How do your assessment activities connect with your program's strategic plan and/or 5-yr assessment plan?

2011-2012 marked Year 1 in our 5-year assessment plan. Our assessment activities were to consist of the following:
1. Assessment liaison appointed to a 3-year term.
2. Assessment committee of 4 members (including liaison) formed.
3. Assessment data collected from gateway (PS 372 and PS 372A) and capstone courses (PS 471A-F) as per the Simplifying Assessment Across the University model. Data will be collected from the Fall and Spring semesters.
4. Data uploaded onto Moodle and assessed by the committee using our department scoring rubrics.
5. Assessment report written and results shared with department.
We met most of our assessment goals for 2011-2012. I served as liaison for one more year, but we have appointed a new liaison (Kristy Michaud) for 2012-2013.

In terms of our program's strategic plan, we are particularly interested in applying the evidence we have gathered to revising our undergraduate curriculum. For 2011-2012, I served on the curriculum committee with the aim of fostering collaboration and a more direct application of evidence of student learning to program activities. Assessment is on the agenda at each of our department meetings and remains part of an ongoing discussion among faculty with regard to the development of writing, critical thinking, and political analytical skills among our majors. We are broadening our assessment program and are discussing the creation of an Introduction to Political Science course that would better serve as a gateway to our major. We are also developing an instrument for assessing our Title V course. In addition, we are making program changes based on evidence from assessment, with a particular focus on helping our students improve their writing skills. We have begun to use ISAs, and we are looking at how to make better use of other University resources, such as WRAD, in our classes.

4. Other information, assessment or reflective activities or processes not captured above.

We continued to participate in the Simplifying Assessment Across the University project and presented our work at the Annual Assessment Retreat in May 2012.

Appendix A. Academic year 2011-2012 assessment data [average]

SLO 1a: Conventions and Coherence: Refers to the mechanics of writing, such as spelling, grammar, and sentence structure. Includes stylistic considerations such as formatting and source documentation.

SLO 1b: Rhetorical Aspects: Refers to the purpose of the assignment, organization of thoughts, and development of an argument.
SLO 4a: Critical Thinking: Present and support argument

SLO 4b: Identify pros and cons, analyze and evaluate alternative points of view